Finetune LLAMA 3

October 5, 2024


Johan Backman

In this article, we'll show how easy it is to fine-tune Llama 3 on your own data. We'll walk through each step and run the training job on Trainwave.

Prerequisites

You'll need:

- A Trainwave account with some credits

- A Hugging Face account and API key

- (Optional) A wandb account and API key

You need to expose WANDB_API_KEY and HF_TOKEN in your local environment. You can do this by running:

export WANDB_API_KEY=yourkey

export HF_TOKEN=yourkey
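Before moving on, it can help to confirm that the variables are actually visible to child processes. The following is a minimal sketch, not part of Trainwave; the helper name `missing_env_vars` is made up for illustration.

```python
import os


def missing_env_vars(required, env=None):
    """Return the names in `required` that are unset or empty in `env`."""
    env = os.environ if env is None else env
    return [name for name in required if not env.get(name)]


# HF_TOKEN is required; WANDB_API_KEY only matters if you use wandb.
missing = missing_env_vars(["HF_TOKEN", "WANDB_API_KEY"])
if missing:
    print("Not set in this shell:", ", ".join(missing))
else:
    print("All expected variables are set.")
```

Run it from the same shell in which you ran the export commands, since exported variables do not carry over to other terminal sessions.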

Step 1: Create a project and configure it

To run on Trainwave, you first have to configure your project. To do so, run the following:

mkdir llama3-ft && cd llama3-ft

wave config

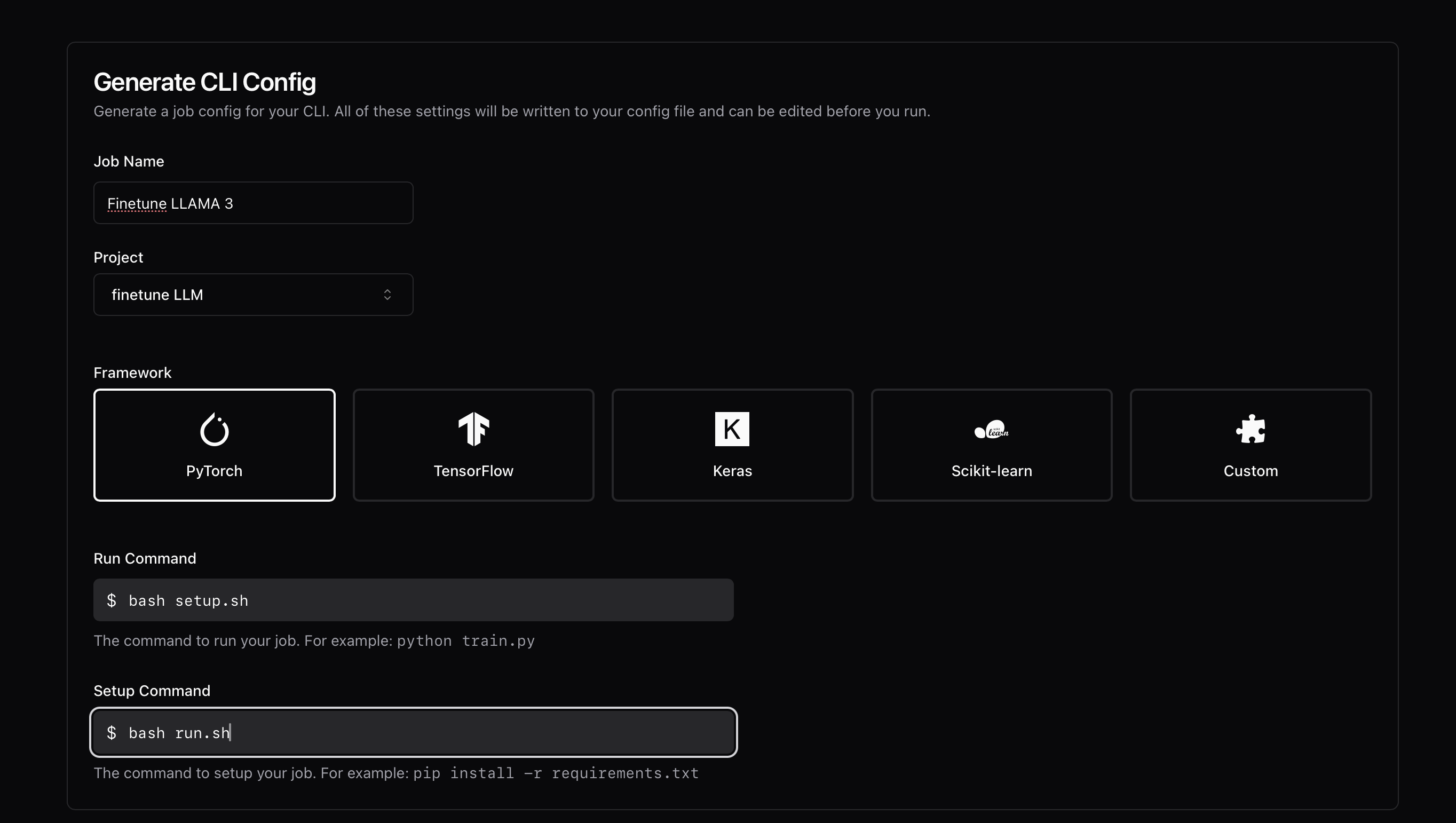

Fill in the details as shown below:

Now pick the GPU type to train on. The training code already supports multi-GPU training (it loads the model with device_map="auto", which spreads the layers across the visible GPUs), so you can pick several GPUs or just one, depending on your patience. In my case I'm going to pick 4 A100s.

Once you save the config, a local file is written to your project folder with contents similar to this:

name = "Finetune LLAMA 3"

project = "p-abc123"

framework = "PyTorch"

gpu_type = "NVIDIA-A100-80GB"

gpus = 4

setup_command = "bash setup.sh"

run_command = "bash run.sh"

organization = "o-gzbqmple"

image = "trainwave/pytorch:2.3.1"

We will add three more lines to the config file. The first two ensure that the machine we start reads in your Hugging Face token and your wandb API key; the third sets the disk size.

env_vars.WANDB_API_KEY = "${WANDB_API_KEY}" # This will read from our own env

env_vars.HUGGINGFACE_TOKEN = "${HF_TOKEN}" # This will read from our own env

hdd_size_mb = 40000 # Specify the disk size you need
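To make the `${...}` placeholders concrete, here is a small sketch of that kind of substitution. How Trainwave resolves these values internally is not described here, so treat this purely as an illustration; `expand_placeholders` and the example values are hypothetical.

```python
from string import Template


def expand_placeholders(value, env):
    """Replace ${NAME} placeholders with values from env.

    Raises KeyError if a referenced variable is missing.
    """
    return Template(value).substitute(env)


# Mirrors the two env_vars lines from the config file.
config_env_vars = {
    "WANDB_API_KEY": "${WANDB_API_KEY}",
    "HUGGINGFACE_TOKEN": "${HF_TOKEN}",
}

# Stand-in for your local shell environment (example values only).
local_env = {"WANDB_API_KEY": "wandb-example", "HF_TOKEN": "hf-example"}

resolved = {
    name: expand_placeholders(value, local_env)
    for name, value in config_env_vars.items()
}
print(resolved)
```

Note the renaming: the local HF_TOKEN ends up as HUGGINGFACE_TOKEN on the remote machine, which is the name that run.sh and train.py read.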

The final config file should look like this:

name = "Finetune LLAMA 3"

project = "p-abc123"

framework = "PyTorch"

gpu_type = "NVIDIA-A100-80GB"

gpus = 4

setup_command = "bash setup.sh"

run_command = "bash run.sh"

organization = "o-gzbqmple"

image = "trainwave/pytorch:2.3.1"

env_vars.WANDB_API_KEY = "${WANDB_API_KEY}"

env_vars.HUGGINGFACE_TOKEN = "${HF_TOKEN}"

hdd_size_mb = 40000

Step 2: Create code files

- Add a train.py in your directory with the contents from Appendix A

- Add the following two script files

run.sh

This is the script that we specified in the run_command. It will run after the setup is done.

#!/bin/bash

# Download the base model weights, then start training
tune download meta-llama/Meta-Llama-3-8B \
    --output-dir /workspace/base_model/ \
    --hf-token "$HUGGINGFACE_TOKEN" \
    --ignore-patterns "original/consolidated*"

python train.py
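Because train.py assumes the download has already succeeded, a small pre-flight check can make a failed download easier to diagnose. This is a sketch, not part of the original training code: `base_model_ready` is a hypothetical helper, and the assumption that the weights arrive as `.safetensors` files should be verified against your download.

```python
from pathlib import Path


def base_model_ready(model_dir):
    """Return True if model_dir has a config.json and at least one .safetensors file."""
    path = Path(model_dir)
    has_config = (path / "config.json").is_file()
    # Assumption: the weights are stored as .safetensors shards.
    has_weights = any(path.glob("*.safetensors"))
    return has_config and has_weights


if not base_model_ready("/workspace/base_model/"):
    print("Base model not found; check the tune download step in run.sh.")
```

You could call this at the top of train.py and exit early instead of waiting for from_pretrained to fail.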

setup.sh

This is the script that we specified in the setup_command. It will run before the run command.

#!/bin/bash

pip install -U transformers datasets accelerate peft trl bitsandbytes wandb torchtune torchao

Step 3: Launch

With your three files (train.py, run.sh and setup.sh) ready, you can now launch the training job.

wave jobs launch

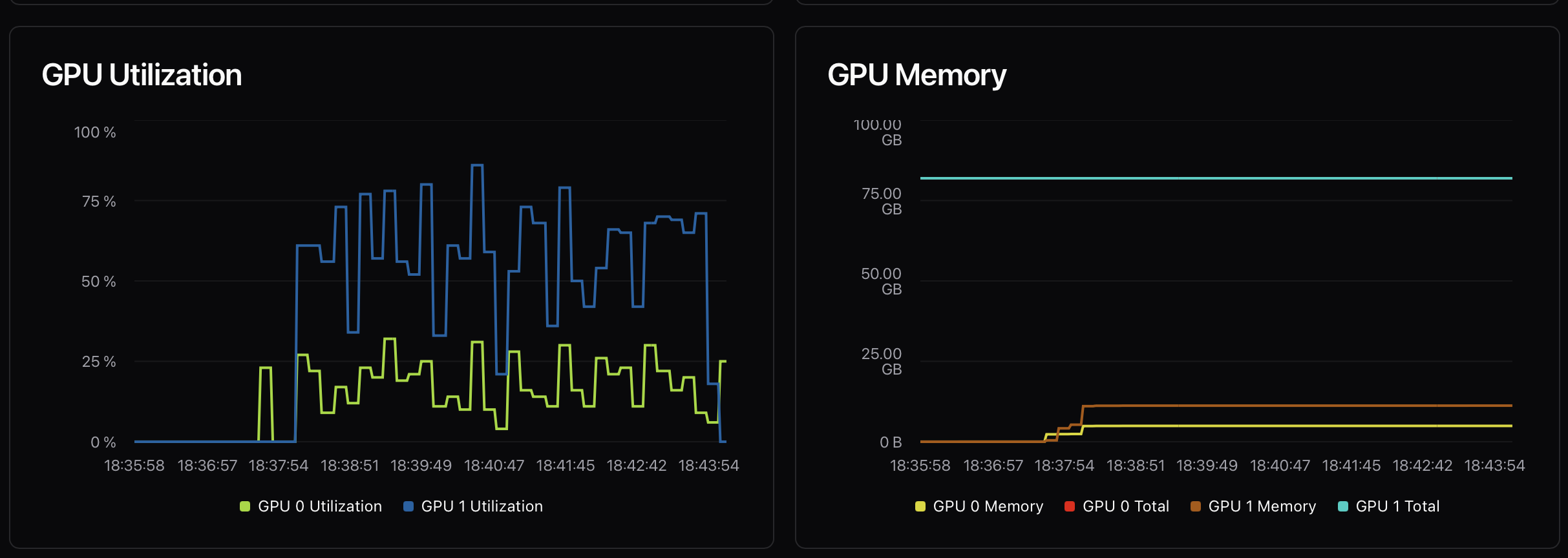

We can now also view the logs and metrics. For instance, the metrics clearly show that we could use a bigger batch size, because we're barely using any GPU memory.
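To see what a bigger batch size would change, the arithmetic can be sketched as follows. The numbers come from Appendix A (1000 samples with a 10% test split, batch size 1, 2 gradient accumulation steps, eval_steps=0.2). The function `training_schedule` is hypothetical, it assumes a single training process as started by `python train.py`, and the exact rounding used by the Trainer may differ between library versions, so treat the results as approximate.

```python
import math


def training_schedule(train_samples, per_device_batch, grad_accum,
                      data_parallel_workers=1, epochs=1, eval_ratio=0.2):
    """Estimate effective batch size, optimizer steps, and eval interval."""
    effective_batch = per_device_batch * grad_accum * data_parallel_workers
    steps_per_epoch = math.ceil(train_samples / effective_batch)
    total_steps = steps_per_epoch * epochs
    # An eval_steps value below 1 is treated as a fraction of total steps.
    eval_every = math.ceil(total_steps * eval_ratio)
    return effective_batch, total_steps, eval_every


train_samples = int(1000 * 0.9)  # 900 samples left after the 10% test split

# Settings from Appendix A
print(training_schedule(train_samples, per_device_batch=1, grad_accum=2))

# The same run with a per-device batch size of 8
print(training_schedule(train_samples, per_device_batch=8, grad_accum=2))
```

With the Appendix A settings, each optimizer step sees only two samples, so raising per_device_train_batch_size cuts the number of steps substantially while using memory that is otherwise idle.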

Appendix

Appendix A: Training code

import os

import torch
import wandb
from datasets import load_dataset
from huggingface_hub import login
from peft import (
    LoraConfig,
    PeftModel,
    get_peft_model,
    prepare_model_for_kbit_training,
)
from transformers import (
    AutoModelForCausalLM,
    AutoTokenizer,
    BitsAndBytesConfig,
    HfArgumentParser,
    TrainingArguments,
    logging,
    pipeline,
)
from trl import SFTTrainer, setup_chat_format

# Initialize authenticated libraries
hf_token = os.getenv("HUGGINGFACE_TOKEN")
wb_token = os.getenv("WANDB_API_KEY")

login(token=hf_token)
wandb.login(key=wb_token)
run = wandb.init(
    project="Fine-tune Llama 3 8B on Medical Dataset",
    job_type="training",
    anonymous="allow",
)

# Set up parameters
torch_dtype = torch.float16
attn_implementation = "eager"

# QLoRA config
bnb_config = BitsAndBytesConfig(
    load_in_4bit=True,
    bnb_4bit_quant_type="nf4",
    bnb_4bit_compute_dtype=torch_dtype,
    bnb_4bit_use_double_quant=True,
)

# This is where the base model is stored. run.sh downloads it
# before starting this script, so we can assume it's there.
base_model = "/workspace/base_model/"

# Load the model
model = AutoModelForCausalLM.from_pretrained(
    base_model,
    quantization_config=bnb_config,
    device_map="auto",
    attn_implementation=attn_implementation,
)

# Load the tokenizer
tokenizer = AutoTokenizer.from_pretrained(base_model)
model, tokenizer = setup_chat_format(model, tokenizer)

# Set up the model parameters (LoRA)
new_model = "/workspace/job/output/llama-3-8b-chat-doctor"

peft_config = LoraConfig(
    r=16,
    lora_alpha=32,
    lora_dropout=0.05,
    bias="none",
    task_type="CAUSAL_LM",
    target_modules=[
        "up_proj",
        "down_proj",
        "gate_proj",
        "k_proj",
        "q_proj",
        "v_proj",
        "o_proj",
    ],
)
model = get_peft_model(model, peft_config)

# HF dataset that we want to fine-tune on
dataset_name = "ruslanmv/ai-medical-chatbot"
dataset = load_dataset(dataset_name, split="all")

# Only use 1000 samples for a quick demo.
# TODO: Remove this if you want to train on the full dataset
dataset = dataset.shuffle(seed=65).select(range(1000))


# Function to define the input data from the dataset
def format_chat_template(row):
    row_json = [
        {"role": "user", "content": row["Patient"]},
        {"role": "assistant", "content": row["Doctor"]},
    ]
    row["text"] = tokenizer.apply_chat_template(row_json, tokenize=False)
    return row


dataset = dataset.map(
    format_chat_template,
    num_proc=4,
)
dataset = dataset.train_test_split(test_size=0.1)

# Define all training arguments
training_arguments = TrainingArguments(
    output_dir=new_model,
    per_device_train_batch_size=1,
    per_device_eval_batch_size=1,
    gradient_accumulation_steps=2,
    optim="paged_adamw_32bit",
    num_train_epochs=1,
    # Newer transformers releases rename this argument to eval_strategy
    evaluation_strategy="steps",
    eval_steps=0.2,  # a value below 1 is a fraction of the total training steps
    logging_steps=1,
    warmup_steps=10,
    logging_strategy="steps",
    learning_rate=2e-4,
    fp16=False,
    bf16=False,
    group_by_length=True,
    report_to="wandb",
)

trainer = SFTTrainer(
    model=model,
    train_dataset=dataset["train"],
    eval_dataset=dataset["test"],
    peft_config=peft_config,
    max_seq_length=512,
    dataset_text_field="text",
    tokenizer=tokenizer,
    args=training_arguments,
    packing=False,
)

trainer.train()
wandb.finish()
model.config.use_cache = True

# Run a test on the model
messages = [
    {
        "role": "user",
        "content": "I have a bad headache. How do I get rid of it?",
    }
]

prompt = tokenizer.apply_chat_template(
    messages, tokenize=False, add_generation_prompt=True
)
inputs = tokenizer(prompt, return_tensors="pt", padding=True, truncation=True).to("cuda")
outputs = model.generate(**inputs, max_length=150, num_return_sequences=1)
text = tokenizer.decode(outputs[0], skip_special_tokens=True)
print(text.split("assistant")[1])

# Save model

trainer.model.save_pretrained(new_model)
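The test prompt above relies on the chat format installed by setup_chat_format. As a rough illustration, assuming it applies its default ChatML layout, the plain-Python sketch below mimics what apply_chat_template produces and why splitting the decoded text on "assistant" recovers the answer. `render_chatml` and the reply string are hypothetical stand-ins; this is not the library's implementation.

```python
def render_chatml(messages, add_generation_prompt=False):
    """Approximate the ChatML layout: each message is wrapped in
    <|im_start|> and <|im_end|> markers, with the role on the first line."""
    text = "".join(
        f"<|im_start|>{m['role']}\n{m['content']}<|im_end|>\n" for m in messages
    )
    if add_generation_prompt:
        # Open an assistant turn for the model to complete.
        text += "<|im_start|>assistant\n"
    return text


messages = [
    {"role": "user", "content": "I have a bad headache. How do I get rid of it?"}
]
prompt = render_chatml(messages, add_generation_prompt=True)

# Hypothetical model output, used only to illustrate the post-processing.
reply = "Rest, drink water, and see a doctor if it persists."

# Decoding with skip_special_tokens=True drops the markers but keeps the role names.
decoded = (prompt + reply).replace("<|im_start|>", "").replace("<|im_end|>", "")
answer = decoded.split("assistant")[1].strip()
print(answer)
```

Note that this split is fragile: if the user's message itself contains the word "assistant", index 1 no longer points at the model's answer.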